Preface

In this lesson we will learn how to:

- work with JSON

- download files

Working with JSON

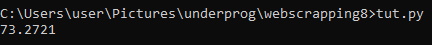

In this example, we will create a program that displays the current US dollar exchange rate.

Copy the following code.

import requests

resp = requests.get("https://www.cbr-xml-daily.ru/daily_json.js").json()

print(resp['Valute']['USD']['Value'])

Yes, that's the whole program. All it does is fetch exchange rate data in JSON format.

resp = requests.get("https://www.cbr-xml-daily.ru/daily_json.js").json()

.json() converts the JSON data into a Python dictionary. From that dictionary we read the dollar exchange rate.

print(resp['Valute']['USD']['Value'])
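To see what the conversion does without touching the network, here is a minimal sketch that uses the standard json module, which does roughly the same job as .json() on a response. The sample string is hypothetical: it only imitates the nesting used above, and the number in it is made up.

```python
import json

# Hypothetical sample shaped like the reply used in the lesson; the value is made up
sample = '{"Valute": {"USD": {"Value": 74.5}}}'

# Parse the JSON text into ordinary Python objects (here: nested dictionaries)
data = json.loads(sample)

# The same chain of keys as in the lesson
usd_rate = data["Valute"]["USD"]["Value"]

print(type(data).__name__)
print(usd_rate)
```

Once the data is a dictionary, each pair of square brackets simply goes one level deeper into the nesting.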

Downloading pictures

In this example, we will learn how to download memes from the site anekdot.ru.

Copy this script:

import requests
from bs4 import BeautifulSoup

# A User-Agent header is required: the site is not available without it
header = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:88.0) Gecko/20100101 Firefox/88.0'
}

resp = requests.get("https://www.anekdot.ru/release/mem/day/", headers=header).text
soup = BeautifulSoup(resp, 'lxml')
images = soup.find_all('img')

for image in images:
    try:
        image_link = image.get('src')
        # Skip img tags that have no src attribute
        if not image_link:
            continue
        image_name = image_link[image_link.rfind('/')+1:]
        image_bytes = requests.get(image_link, headers=header).content
        print(image_link)
        with open(f'images/{image_name}', 'wb') as f:
            f.write(image_bytes)
    except Exception:
        print('error skipped')

Let's analyze the code.

header = {  # a User-Agent header is required: the site is not available without it
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:88.0) Gecko/20100101 Firefox/88.0'
}

resp = requests.get("https://www.anekdot.ru/release/mem/day/", headers=header).text

Here we fetch the HTML page and pass a User-Agent header with the request. The header is required: without it we get "Access Denied" instead of the HTML page.

Next, we get all the pictures (elements with the img tag).

soup = BeautifulSoup(resp, 'lxml')
images = soup.find_all('img')

In each img tag, we read the src attribute (the link to the image).

image_link = image.get('src')

From the link, we extract the file name of the picture.

image_name = image_link[image_link.rfind('/')+1:]

Then we download the picture.

image_bytes = requests.get(image_link, headers=header).content

.content, unlike .text and .json(), returns the data as raw bytes.
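The difference between bytes and text can be shown with a small sketch that needs no network access. The sample string is made up. The sketch only illustrates that bytes are the raw data and text is the decoded form of those bytes, which is roughly how .content and .text relate.

```python
# Hypothetical sample standing in for a downloaded response body
raw = "café".encode("utf-8")   # bytes, comparable to resp.content

# Decoding turns the raw bytes back into a string, comparable to resp.text
text = raw.decode("utf-8")

print(type(raw).__name__)
print(type(text).__name__)

# The lengths differ: 'é' is one character but takes two bytes in UTF-8
print(len(raw), len(text))
```

A picture is not text at all, so it has no meaningful decoded form. That is why the script takes the bytes and writes them to a file unchanged.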

The resulting image is saved in the images folder. Create an images folder in the script directory first.

with open(f'images/{image_name}', 'wb') as f:
    f.write(image_bytes)

Just in case, I wrapped it all in try/except so that the program skips errors instead of stopping.
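As a variation on the saving step, the sketch below creates the folder automatically instead of relying on it being there. The function name save_image, the example.com link and the fake bytes are all assumptions made for illustration. The demo writes to a temporary directory so it does not touch the script folder.

```python
import os
import tempfile


def save_image(image_bytes, image_link, folder):
    """Save bytes under the file name taken from the end of the link."""
    # Same rule as in the lesson: everything after the last '/'
    image_name = image_link[image_link.rfind('/') + 1:]
    # Create the folder if it is missing; do nothing if it already exists
    os.makedirs(folder, exist_ok=True)
    path = os.path.join(folder, image_name)
    # 'wb' opens the file for writing in binary mode
    with open(path, 'wb') as f:
        f.write(image_bytes)
    return path


# Usage with made-up data, written to a temporary directory
demo_folder = os.path.join(tempfile.mkdtemp(), 'images')
saved_path = save_image(b'fake image bytes', 'https://example.com/pics/cat.jpg', demo_folder)
print(saved_path)
```

In the lesson's loop, a call such as save_image(image_bytes, image_link, 'images') could replace the with open block.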

That's all: the program can now download pictures. If you like, you can extend it to download music and videos.